Inference vs Prediction

Generative modeling or predictive modeling?

Bayesian methods make use of Bayes’ theorem to perform statistical inference. Bayes’ law states that a conditional probability can be decomposed in the following way:

\[P(A | B) = \frac{P(B|A) P(A)}{P(B)}\]

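where \(A\) and \(B\) indicate two events. The following terms are assigned to each of the quantities: \(P(A | B)\) is the posterior, \(P(B | A)\) is the likelihood, \(P(A)\) is the prior, and \(P(B)\) is the evidence.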

In statistical modeling, another parameterization is typically used. Let \(X\) indicate the data and \(\Theta\) indicate the model parameters. Then Bayes’ rule can be formulated as follows:

\[P(\Theta | X) = \frac{P(X | \Theta) P(\Theta)}{P(X)}\]

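These quantities are interpreted as follows: \(P(\Theta | X)\) is the probability of the parameters given the data, \(P(X | \Theta)\) is the probability of the data under the given parameters, \(P(\Theta)\) expresses what is believed about the parameters before seeing the data, and \(P(X)\) is the probability of the data.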

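The probability of the data, \(P(X)\), can be ignored when we are interested in \(P(\Theta | X)\) merely for comparing parameter settings, since \(P(X)\) does not depend on \(\Theta\). It only acts as a normalizing constant, so the posterior is proportional to \(P(X | \Theta) P(\Theta)\).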
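To make this concrete, here is a minimal sketch that evaluates Bayes' rule on a grid of parameter values. The setup is an assumption chosen purely for illustration: a coin with unknown bias \(\Theta\), a flat prior, and made-up data of 7 heads in 10 flips. The function name `grid_posterior` is hypothetical as well.

```python
from math import comb

def grid_posterior(heads, flips, grid_size=101):
    """Evaluate the posterior of a coin's bias theta on an evenly spaced grid."""
    thetas = [i / (grid_size - 1) for i in range(grid_size)]
    prior = [1.0 for _ in thetas]  # flat prior P(theta): an illustrative assumption
    likelihood = [
        comb(flips, heads) * t**heads * (1 - t) ** (flips - heads)  # P(X | theta)
        for t in thetas
    ]
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    evidence = sum(unnormalized)  # plays the role of P(X) on the grid
    posterior = [u / evidence for u in unnormalized]
    return thetas, unnormalized, posterior

# Made-up data: 7 heads in 10 flips.
thetas, unnormalized, posterior = grid_posterior(heads=7, flips=10)

# The most probable theta is the same with or without dividing by P(X).
best_unnormalized = thetas[max(range(len(thetas)), key=unnormalized.__getitem__)]
best_posterior = thetas[max(range(len(thetas)), key=posterior.__getitem__)]
print(best_unnormalized, best_posterior)
```

Dividing by the evidence rescales every grid point by the same constant, so the ranking of parameter values is unchanged. The normalization only matters when actual probabilities are needed.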

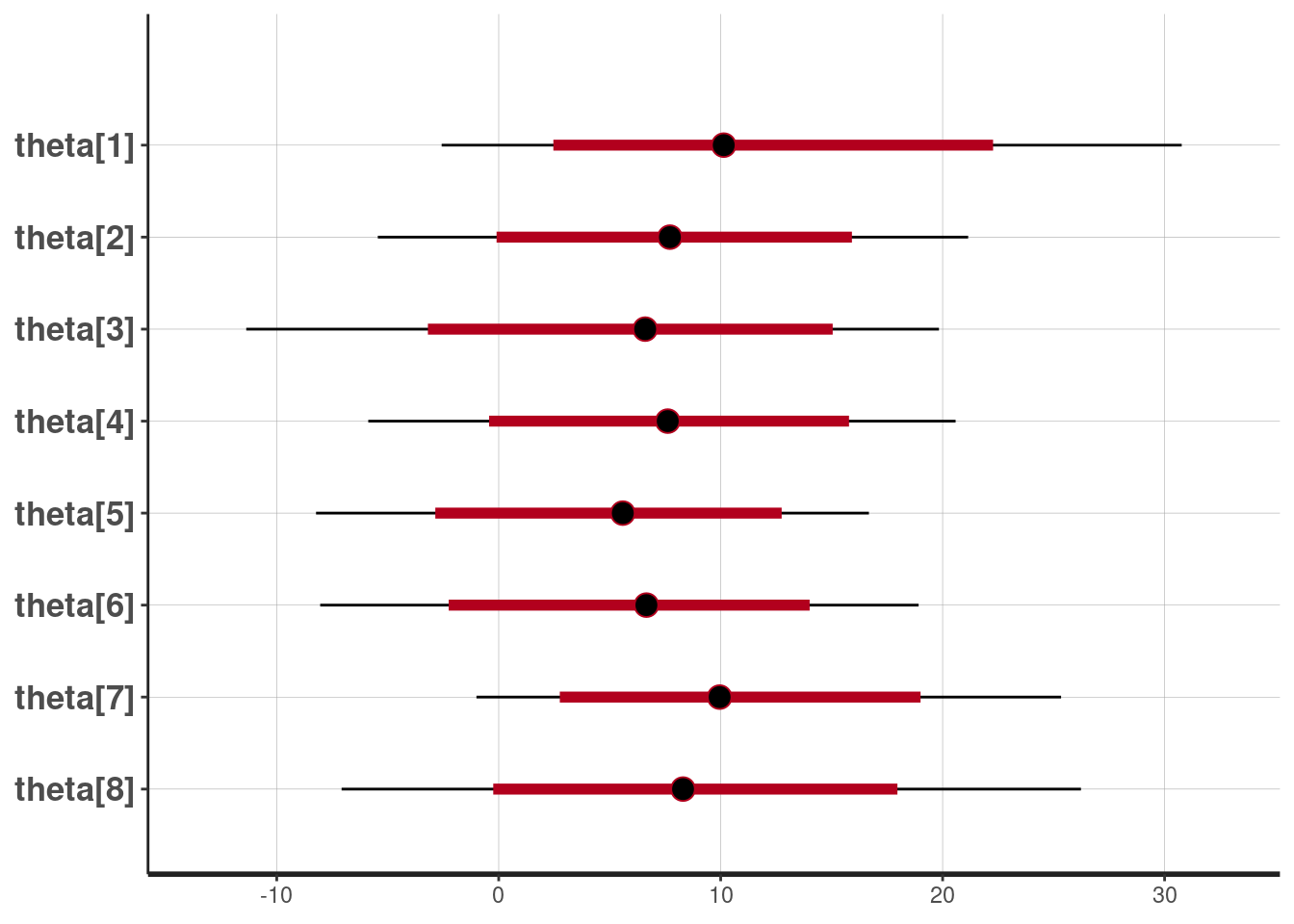

Due to the use of prior knowledge, Bayesian approaches are typically parametric in the sense that these methods specify models based on assumptions about the data generation process. A challenge of Bayesian methods is that the posterior distribution may be very hard to compute explicitly, which is why Markov chain Monte Carlo (MCMC) is often used to sample from the posterior distribution.
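As a rough illustration of sampling from a posterior, the following sketch implements a random-walk Metropolis sampler, one of the simplest MCMC algorithms. The coin model, the flat prior, the data (7 heads in 10 flips), the step size, and the burn-in length are all assumptions made for the example, and the function names are hypothetical.

```python
import math
import random

def log_unnormalized_posterior(theta, heads, flips):
    """log of P(X | theta) * P(theta) for a coin with a flat prior on (0, 1)."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # the prior puts no mass outside (0, 1)
    return heads * math.log(theta) + (flips - heads) * math.log(1.0 - theta)

def metropolis(heads, flips, n_samples=20000, step=0.2, seed=0):
    """Random-walk Metropolis sampler for the coin-bias posterior."""
    rng = random.Random(seed)
    theta = 0.5  # arbitrary starting point
    current = log_unnormalized_posterior(theta, heads, flips)
    samples = []
    for _ in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)  # symmetric proposal
        candidate = log_unnormalized_posterior(proposal, heads, flips)
        # Accept with probability min(1, ratio). P(X) cancels in the ratio,
        # so the evidence never has to be computed.
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        samples.append(theta)
    return samples

samples = metropolis(heads=7, flips=10)
kept = samples[2000:]  # discard an assumed burn-in period
posterior_mean = sum(kept) / len(kept)
print(round(posterior_mean, 3))
```

For this toy model the posterior is known in closed form (a Beta(8, 4) distribution with mean 8/12), which makes it easy to check that the sampler behaves sensibly. In realistic models no such closed form is available, and that is where MCMC earns its keep.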

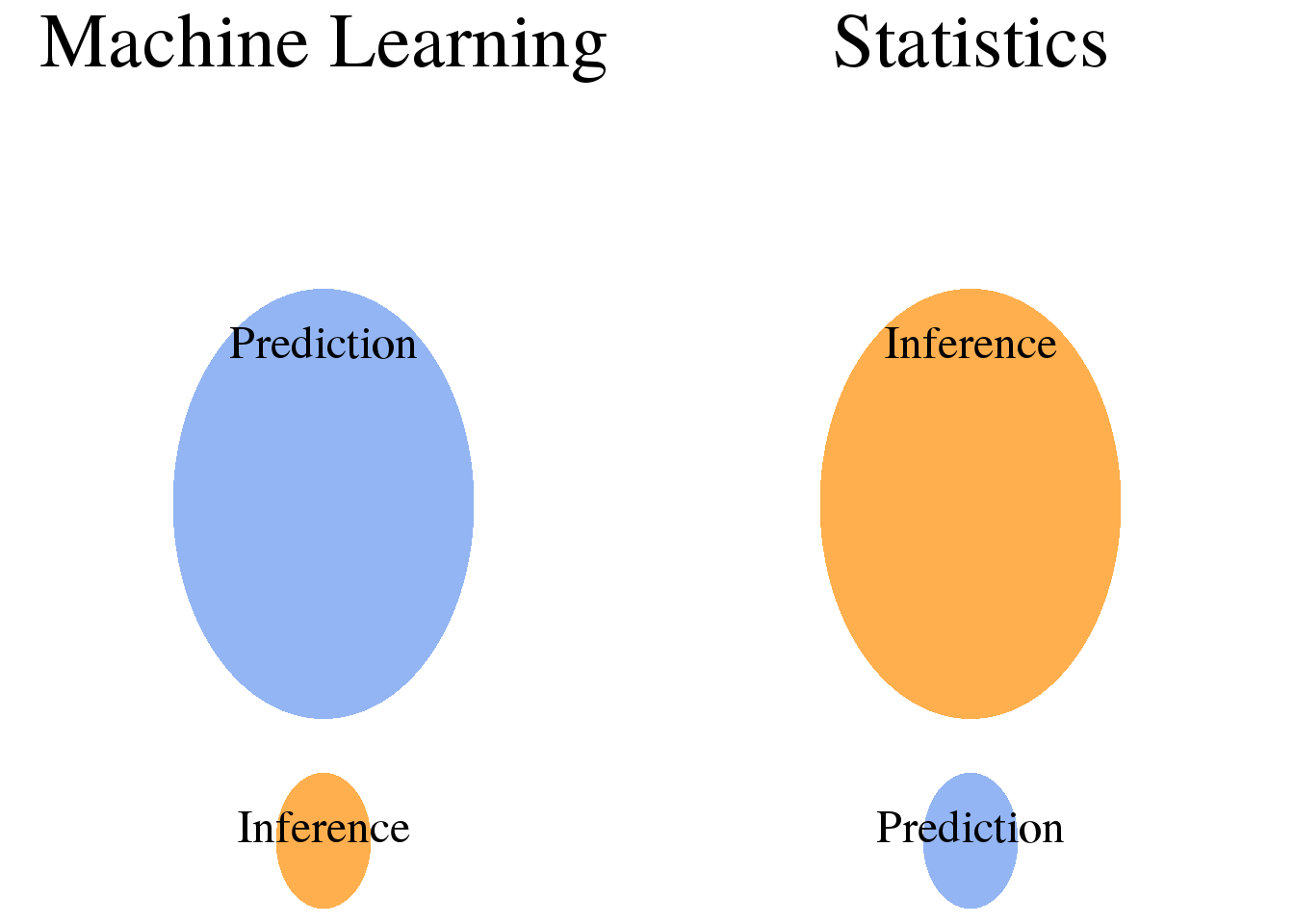

Bayesian methods are concerned with statistical inference rather than prediction. Inference is concerned with learning how the observed outcomes are generated as a function of the data. Prediction, on the other hand, is concerned with building a model that can estimate the outcome for unseen data. Note that there are methods that can be used for both tasks. For example, logistic regression can be used to measure the impact of individual features on the outcome (inference) and to estimate the outcome for new observations (prediction).
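The dual use of logistic regression can be sketched in a few lines. The example below fits the model with plain gradient descent rather than a library routine. The data set is made up, and the feature names (`hours_studied`, `attended_review`), the learning rate, and the number of epochs are all illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.1, epochs=5000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n_features = len(X[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for row, target in zip(X, y):
            error = sigmoid(bias + sum(w * x for w, x in zip(weights, row))) - target
            for j, x in enumerate(row):
                grad_w[j] += error * x
            grad_b += error
        weights = [w - lr * g / len(X) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(X)
    return weights, bias

def predict_proba(weights, bias, row):
    """Estimated probability of outcome 1 for one observation."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, row)))

# Made-up toy data: [hours_studied, attended_review] -> passed exam (1) or not (0).
feature_names = ["hours_studied", "attended_review"]
X = [[1, 0], [2, 1], [3, 0], [4, 1], [4, 1], [5, 0], [5, 0], [6, 1], [7, 0], [8, 1]]
y = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

weights, bias = fit_logistic(X, y)

# Inference: each coefficient is the change in log-odds per unit of its feature.
for name, w in zip(feature_names, weights):
    print(name, round(w, 3))

# Prediction: estimate the outcome for observations that were not in the data.
p_high = predict_proba(weights, bias, [8, 0])
p_low = predict_proba(weights, bias, [1, 0])
print(round(p_high, 3), round(p_low, 3))
```

The same fitted model serves both purposes. Reading the coefficients is the inferential use, while calling `predict_proba` on new rows is the predictive use.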

In essence, the difference between inference and prediction boils down to model interpretability. If a model is interpretable (i.e. you can understand how the predictions are formed) it probably performs inference, while models that are hard to interpret probably perform prediction. To make the distinction clearer, consider the following examples:

To obtain a better intuition for how Bayesian thinking differs, you should read this great post at Stats Exchange.
